CherryPy questions: testing, SSL and Docker

This is essentially a work-in-progress article about CherryPy. It aims to draw a line under the testing infrastructure I have been working on, which now seems complete. The article covers what has been done and which issues I met while reviving the test suite so that it serves its purpose. The road is not fully travelled, of course, and a lot is left to do. The article also seeks to address concerns about the correctness and viability of CherryPy’s SSL functionality. Finally, it tries to answer the questions that have come up about the official Docker image and its range of application.


Testing

As was discovered in Future of CherryPy, quality assurance was a glaring problem. After I volunteered and started putting the tests back in order, I discovered several methodological testing errors that had accumulated over the years of CherryPy’s existence. Back in the day CherryPy had its own test runner and later survived a migration to Nose, but lost its SSL tests along the way. The tests were not purged from the codebase, but they could not be run automatically: a tester had to change code to run them with SSL. Obviously no one did, and that led to several issues that left SSL functionality broken. It still is: at the moment of writing, SSL doesn’t work in the latest CherryPy release, 3.6.

Besides the testing issues, there were issues with CherryPy functionality as such. For instance, session file locking didn’t seem to have been tested on machines with more than one execution unit, so session locks didn’t work reliably on machines with a multi-core CPU.

You may be tempted to think that as long as there is the GIL [1], you shouldn’t care about the number of CPUs. Unfortunately, serial execution doesn’t mean execution in one particular order. Thus, with a different number of execution units and with different GIL implementations (Python 3.2 has a new GIL [2]), a threaded Python program will likely behave differently.

I’ve fixed file locking by postponing lock file removal to a cleanup process. To cover the other session backends briefly: it was decided to remove the Postgres session backend because it was broken, untested and mostly undocumented. The Memcached session backend was mostly fine, except for a minor str/bytes issue in py3 and the fact that it isn’t very useful in its current condition. The problem is that it doesn’t implement distributed locking, so once you have two or more CherryPy instances that use a Memcached server as a session backend and your users are not distributed consistently across the instances, all sorts of concurrent update issues may arise.
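
To illustrate the idea of keeping lock files around and removing them only in a cleanup step, here is a minimal sketch. It is not the code from my fork: it assumes POSIX advisory locks (`fcntl.flock`), and the helper names `acquire_lock`, `release_lock` and `cleanup_locks` are hypothetical.

```python
import fcntl
import os


def acquire_lock(path):
    """Take an exclusive lock on the lock file, creating it if needed.

    Returns an open file descriptor, or None if someone else holds the lock.
    """
    while True:
        fd = os.open(path, os.O_CREAT | os.O_RDWR)
        try:
            fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except OSError:
            os.close(fd)
            return None
        # The cleanup job may have unlinked the file between open() and
        # flock(); in that case we hold a lock on a dead inode and retry.
        try:
            current = os.stat(path).st_ino == os.fstat(fd).st_ino
        except OSError:
            current = False
        if current:
            return fd
        os.close(fd)


def release_lock(fd):
    """Drop the lock but leave the lock file on disk."""
    fcntl.flock(fd, fcntl.LOCK_UN)
    os.close(fd)


def cleanup_locks(directory, suffix='.lock'):
    """Remove lock files nobody holds; meant for a periodic cleanup job."""
    removed = []
    for name in sorted(os.listdir(directory)):
        if not name.endswith(suffix):
            continue
        path = os.path.join(directory, name)
        fd = acquire_lock(path)
        if fd is not None:
            os.remove(path)
            release_lock(fd)
            removed.append(name)
    return removed
```

Because releasing never deletes the file, two threads cannot race on a file that disappears under them; only the cleanup job removes files, and it does so while holding the lock itself.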

Substantial methodological errors in the test suite were:

- test determinism

- test dependency

- test isolation

Test determinism

There were garbage collection tests that were automatically added to every subclass of test.helper.CPWebCase. They are non-deterministic and fail sporadically (there are other, normal tests with non-deterministic behaviour, see below). Because CPython’s reference counting garbage collector is deterministic, this is a concurrency issue. What really surprised me was hearing an explanation like: okay, if it has failed, run it again until you’re sure that the failure persists. This test_gc supplementing is disabled now until everything else is resolved and it is clear how to handle it properly.

Another thing that prevented a clear classification into pass and fail, and led to frequent freezes, was request retries combined with the absence of a socket timeout in the HTTP client. I can only guess at the original reasons for such “carefulness” and for not letting a test just fail. For instance, take some of the concurrency tests that spawn up to a hundred client threads and imagine the stability and predictability of the undertaking with 10 retries and no client timeout. Both were fixed: test.webtest.openURL no longer retries on socket errors and sets a 10-second timeout on its HTTP connections by default.
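
The fail-fast behaviour can be sketched as follows. This is not the actual test.webtest.openURL; the function name `open_url` is hypothetical, and the sketch uses Python 3’s http.client (httplib in Python 2).

```python
import http.client


def open_url(host, port, path, timeout=10.0):
    """Issue a single GET request; fail fast instead of retrying.

    Socket errors and timeouts propagate to the caller, so a test
    either passes or fails and never hangs indefinitely.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request('GET', path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

With no retry loop, a refused connection or a timeout surfaces as an exception in the test that caused it.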

test.helper.CPWebCase starts a full in-process CherryPy server and then uses a real httplib.HTTPConnection to connect to it. Most CherryPy tests are in fact either integration [3] or system tests [4].

Test dependency

How do you like tests named test_0_check_something, test_1_check_something_else, and so on? And what if they were named this way intentionally, so that they run in order, and fail otherwise because the latter depend on the former? In particular, you cannot run such a test individually. All the occurrences I found were renamed and freed from their sibling shackles.

Test isolation

test.helper.CPWebCase’s server startup routine ran in the equivalent of unittest’s setUpClass, so the tests in a test case were not isolated from each other. That made it problematic to deal with tests that interfere with internals, because it was really hard to say whether such a test had failed on its own or as a consequence of another one. I’ve added a per_test_setup attribute to CPWebCase to conditionally run the routine in setUp, and adapted the tests’ code accordingly. I feared significant performance degradation, but the suite became only around 20% slower, which is why I made the attribute true by default. Maybe it shouldn’t exist at all now.
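
The switch between per-class and per-test startup can be sketched like this. It is a simplified illustration, not CPWebCase itself: `FakeServer` and `WebCase` are hypothetical stand-ins, and only the per_test_setup attribute name comes from the real code.

```python
import unittest


class FakeServer(object):
    """Stand-in for the in-process server; counts how often it starts."""
    starts = 0

    @classmethod
    def start(cls):
        cls.starts += 1

    @classmethod
    def stop(cls):
        pass


class WebCase(unittest.TestCase):
    # True: fresh server for every test; False: one server per test class.
    per_test_setup = True

    @classmethod
    def setUpClass(cls):
        if not cls.per_test_setup:
            FakeServer.start()

    @classmethod
    def tearDownClass(cls):
        if not cls.per_test_setup:
            FakeServer.stop()

    def setUp(self):
        if self.per_test_setup:
            FakeServer.start()

    def tearDown(self):
        if self.per_test_setup:
            FakeServer.stop()
```

A test case that is too slow with a fresh server per test can opt out by setting per_test_setup = False on the subclass.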

Unfortunately, this doesn’t resolve all isolation issues, because a complete reset of an in-process threaded server is a juggling act in any case. When a tricky test fails badly, it still has a side effect (see below), like this:

./cherrypy/process/wspbus.py:233: RuntimeWarning: The main thread is exiting, but the Bus is in the states.STARTING state; shutting it down automatically now. You must either call bus.block() after start(), or call bus.exit() before the main thread exits.

Testing progress

The fixes and improvements described above live in my fork [5] and are not yet pushed upstream. A more detailed description and discussion of this and the preceding sections is in the thread in the CherryPy user group [6]. Because that thread is only there until Google decides to shut down Google Groups because of low traffic, abuse or any other reason they use for such announcements, I will summarise the achievements in improving the CherryPy testing infrastructure here.

- Migrated tests to stdlib unittest, with unittest2 as a fallback for py26. Nose is unfriendly to py3 and was probably related to some locking issues.

- Overall improvement in avoiding freezes in various test cases and testing environments. TTY in Docker, disabled interactive mode, various internals’ tests wait in a thread, et cetera.

- Implemented “tagged” Tox 1.8+ configuration for matrix of various environments (see below).

- Integrated with Drone.io [7] CI service and Codecov.io [8] code coverage tracking service.

- Made various changes to allow parallel environment runs with Detox, to fit into the 15 minutes of the Drone.io free tier. This includes running tests in the install directory [9], starting the server each time on a free port provided by the OS, locking Memcached cases with an atomic operation, and others.

- Removed global and persistent configuration that prevented mixing HTTP and HTTPS cases.

- Made it possible to work on the test suite in PyDev. The PyDev test runner adds another non-daemonic thread that the tests don’t expect, which leads to deadlocks or failures in 3 tests. They are now just skipped under the PyDev runner.
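
The “free port provided by the OS” item in the list above boils down to binding to port 0. A minimal sketch with a plain socket (the helper name `bind_free_port` is hypothetical; how the port is handed to the server in the test suite is not shown here):

```python
import socket


def bind_free_port(host='127.0.0.1'):
    """Bind a listening socket to a port chosen by the OS.

    Returning the bound socket (rather than just the number) avoids the
    race where another process grabs the port between lookup and use.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, 0))
    sock.listen(5)
    return sock, sock.getsockname()[1]
```

Parallel environments then never compete for a hard-coded port number.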

Here are the Drone.io commands required to run the test suite. They use Deadsnakes [10] to install old or not yet stable versions of Python. The development packages are needed to build pyOpenSSL. The rest comes with the Drone.io container out of the box.

echo 'debconf debconf/frontend select noninteractive' | sudo debconf-set-selections

sudo add-apt-repository ppa:fkrull/deadsnakes &> /dev/null

sudo apt-get update &> /dev/null

sudo apt-get -y install python2.6 python3.4 &> /dev/null

sudo apt-get -y install python2.6-dev python3.4-dev &> /dev/null

sudo pip install --quiet detox

detox

tox -e post

As you can see, the post environment closes the build. Unless the build was successful, it won’t run, and no coverage will be submitted and no build artifacts will become available. The build artifacts, which also need to be listed in Drone.io, are several quality assurance reports that can be helpful for further improving the codebase:

coverage_report.txt coverage_report.html.tgz maintenance_index.txt code_complexity.txt

For the Codecov.io integration to work, the environment variable CODECOV_TOKEN should be set.

Tagged Tox configuration

The final Tox configuration looks like the following. It emerged through a series of rewrites driven by tradeoffs between test duration, a combined coverage report and the need to test dependencies. I came to the last design after I realised the importance of supporting pyOpenSSL and thus the need to test it (see the SSL issues below). After that, the test sampling facility was easy to integrate into it.

    [tox]
    minversion = 1.8
    envlist = pre,docs,py{26-co,27-qa,33-nt,34-qa}{,-ssl,-ossl}

    # run tests from install dir, not checkout dir
    # http://tox.rtfd.org/en/latest/example/pytest.html#known-issues-and-limitations
    [testenv]
    changedir = {envsitepackagesdir}/cherrypy
    setenv =
        qa: COVERAGE_FILE = {toxinidir}/.coverage.{envname}
        ssl: CHERRYPY_TEST_SSL_MODULE = builtin
        ossl: CHERRYPY_TEST_SSL_MODULE = pyopenssl
        sa: CHERRYPY_TEST_XML_REPORT_DIR = {toxinidir}/test-xml-data
    deps =
        routes
        py26: unittest2
        py{26,27}: python-memcached
        py{33,34}: python3-memcached
        qa: coverage
        ossl: pyopenssl
        sa: unittest-xml-reporting
    commands =
        python --version
        nt: python -m unittest {posargs: discover -v cherrypy.test}
        co: unit2 {posargs:discover -v cherrypy.test}
        qa: coverage run --branch --source="." --omit="t*/*" \
        qa: --module unittest {posargs: discover -v cherrypy.test}
        sa: python test/xmltestreport.py

    [testenv:docs]
    basepython = python2.7
    changedir = docs
    commands = sphinx-build -q -E -n -b html . build
    deps =
        sphinx < 1.3
        sphinx_rtd_theme

    [testenv:pre]
    changedir = {toxinidir}
    deps = coverage
    commands = coverage erase

    # must be run separately after main envlist, because of detox
    [testenv:post]
    changedir = {toxinidir}
    deps =
        coverage
        codecov
        radon
    commands =
        bash -c 'echo -e "[paths]\nsource = \n cherrypy" > .coveragerc'
        bash -c 'echo " .tox/*/lib/*/site-packages/cherrypy" >> .coveragerc'
        coverage combine
        bash -c 'coverage report > coverage_report.txt'
        coverage html
        tar -czf coverage_report.html.tgz htmlcov
        - codecov
        bash -c 'radon mi -s -e "cherrypy/t*/*" cherrypy > maintenance_index.txt'
        bash -c 'radon cc -s -e "cherrypy/t*/*" cherrypy > code_complexity.txt'
    whitelist_externals =
        /bin/tar
        /bin/bash

    # parse and collect XML files of XML test runner
    [testenv:report]
    deps =
    changedir = {toxinidir}
    commands = python -m cherrypy.test.xmltestreport --parse

This design relies heavily on the great multi-dimensional configuration that appeared in Tox 1.8 [11]. Given an environment, say py27-nt-ossl, Tox treats it as a set of tags, {'py27', 'nt', 'ossl'}, where each entry is matched individually. This way any environment with the desired qualities, as long as it makes sense, can be constructed even if it isn’t listed in envlist. Here’s a table that explains the purpose of the tags.

| tag | meaning |
|---|---|
| nt | normal test run via unittest |
| co | compatibility run via unittest2 |
| qa | quality assurance run via coverage |
| sa | test sampling run via xmlrunner |
| ssl | run using Python builtin ssl module |
| ossl | run using PyOpenSSL |
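
To make the tag matching concrete, here is a simplified model of it. This is not Tox’s implementation, only a sketch of the behaviour described above; the function names `expand_envlist` and `select_lines` are hypothetical, and lines that contain a colon without being conditional are not handled.

```python
import itertools
import re


def expand_envlist(spec):
    """Expand one envlist entry with {a,b} groups into concrete names."""
    parts = re.split(r'\{([^}]*)\}', spec)
    # Even indexes are literal text, odd indexes are comma-separated choices.
    choices = [part.split(',') if i % 2 else [part]
               for i, part in enumerate(parts)]
    return [''.join(combo) for combo in itertools.product(*choices)]


def select_lines(env_name, lines):
    """Keep the settings whose tag condition matches the environment."""
    tags = set(env_name.split('-'))
    selected = []
    for line in lines:
        condition, sep, value = line.partition(':')
        if not sep:
            selected.append(line.strip())  # unconditional line
            continue
        alternatives = expand_envlist(condition.strip())
        # A condition matches if all tags of any alternative are present.
        if any(tags.issuperset(alt.split('-')) for alt in alternatives):
            selected.append(value.strip())
    return selected
```

For example, `select_lines('py27-nt-ossl', ['routes', 'py{26,27}: python-memcached', 'qa: coverage', 'ossl: pyopenssl'])` keeps routes, python-memcached and pyopenssl, and drops coverage.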

Note the {posargs: discover -v cherrypy.test} part. It makes it possible to run a subset of the test suite in the desired environments, e.g.:

tox -e "py{26-co-ssl,27-nt,33-qa,34-nt-ssl}" -- -v cherrypy.test.test_http \

cherrypy.test.test_conn.TestLimitedRequestQueue \

cherrypy.test.test_caching.TestCache.test_antistampede

Tackling entropy of threading and in-process server juggling

Even though there was progress in making the test suite more predictable, and actually runnable, it is still far from the desired state, and I was scratching my head trying to figure out how to gather test stats from all the environments. The solution was in the lighted place, right where I was looking for it. There’s a package, unittest-xml-reporting, whose name is pretty descriptive. It’s a unittest runner that writes XML files like TEST-test.test_auth_basic.TestBasicAuth-20150410005927.xml with easy-to-guess content. All that remained was to write some glue code for overnight runs and to implement parsing and aggregation.
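
The parsing and aggregation step can be sketched as below. This is not the actual xmltestreport module: it assumes the usual JUnit-style layout (testcase elements with classname and name attributes and optional failure, error or skipped children), and the function names are hypothetical.

```python
import collections
import xml.etree.ElementTree as ET


def aggregate(reports):
    """Count failures, errors and successes per test across XML reports.

    ``reports`` is an iterable of XML strings, one per test run.
    """
    stats = collections.defaultdict(lambda: {'F': 0, 'E': 0, 'S': 0})
    for text in reports:
        root = ET.fromstring(text)
        for case in root.iter('testcase'):
            if case.find('skipped') is not None:
                continue  # skipped tests say nothing about stability
            key = '{0}.{1}'.format(case.get('classname'), case.get('name'))
            if case.find('failure') is not None:
                stats[key]['F'] += 1
            elif case.find('error') is not None:
                stats[key]['E'] += 1
            else:
                stats[key]['S'] += 1
    return dict(stats)


def unstable(stats):
    """Return only the tests that failed or errored at least once."""
    return {k: v for k, v in stats.items() if v['F'] or v['E']}
```

Feeding it the reports of many runs of one environment yields per-test counts of failures, errors and successes, from which only the tests that ever broke need to be reported.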

I’ve implemented a module named xmltestreport [12] and incorporated it into the test package. The sa Tox tag corresponds to it. Running the following in a terminal in the evening can give you the following table by morning (except for the N/A environments, see below).

for i in {1..64}; do tox -e "py{26,27,33,34}-sa{,-ssl,-ossl}"; done

tox -e report

| py26-sa | F | E | S |
|---|---|---|---|
| OK | | | |

| py26-sa-ossl | F | E | S |
|---|---|---|---|
| test_conn.TestLimitedRequestQueue.test_queue_full | 27 | 0 | 0 |
| test_request_obj.TestRequestObject.testParamErrors | 1 | 0 | 26 |
| test_static.TestStatic.test_file_stream | 27 | 0 | 0 |

| py26-sa-ssl | F | E | S |
|---|---|---|---|
| test_caching.TestCache.test_antistampede | 0 | 33 | 0 |
| test_config_server.TestServerConfig.testMaxRequestSize | 0 | 1 | 32 |
| test_conn.TestLimitedRequestQueue.test_queue_full | 33 | 0 | 0 |
| test_static.TestStatic.test_file_stream | 33 | 0 | 0 |

| py27-sa | F | E | S |
|---|---|---|---|
| OK | | | |

| py27-sa-ossl | F | E | S |
|---|---|---|---|
| test_conn.TestLimitedRequestQueue.test_queue_full | 33 | 0 | 0 |
| test_session.TestSession.testFileConcurrency | 1 | 0 | 32 |
| test_states.TestWait.test_wait_for_occupied_port_INADDR_ANY | 1 | 0 | 32 |
| test_static.TestStatic.test_file_stream | 33 | 0 | 0 |
| test_tools.TestTool.testCombinedTools | 2 | 0 | 31 |

| py27-sa-ssl | F | E | S |
|---|---|---|---|
| test_caching.TestCache.test_antistampede | 0 | 33 | 0 |
| test_config_server.TestServerConfig.testMaxRequestSize | 0 | 4 | 29 |
| test_conn.TestLimitedRequestQueue.test_queue_full | 33 | 0 | 0 |
| test_static.TestStatic.test_file_stream | 33 | 0 | 0 |
| test_tools.TestTool.testCombinedTools | 2 | 0 | 31 |

| py33-sa | F | E | S |
|---|---|---|---|
| test_conn.TestPipeline.test_HTTP11_pipelining | 0 | 16 | 17 |

| py33-sa-ossl | F | E | S |
|---|---|---|---|
| N/A | | | |

| py33-sa-ssl | F | E | S |
|---|---|---|---|
| test_caching.TestCache.test_antistampede | 0 | 33 | 0 |
| test_static.TestStatic.test_file_stream | 33 | 0 | 0 |
| test_tools.TestTool.testCombinedTools | 3 | 0 | 30 |

| py34-sa | F | E | S |
|---|---|---|---|
| test_conn.TestLimitedRequestQueue.test_queue_full | 0 | 1 | 32 |
| test_conn.TestPipeline.test_HTTP11_pipelining | 0 | 14 | 19 |

| py34-sa-ossl | F | E | S |
|---|---|---|---|
| N/A | | | |

| py34-sa-ssl | F | E | S |
|---|---|---|---|
| test_caching.TestCache.test_antistampede | 0 | 32 | 0 |
| test_config_server.TestServerConfig.testMaxRequestSize | 0 | 1 | 31 |
| test_static.TestStatic.test_file_stream | 32 | 0 | 0 |
| test_tools.TestTool.testCombinedTools | 3 | 0 | 29 |

Actually, as you can see, it only had time for half of the iterations, and in the morning I interrupted it. Sylvain, you see, I mentioned those three tests in the group correctly. But there are other candidates, and there is this newly arisen fragility of SSL sockets, if I understand it correctly. I mean all the tests with one failure or error. For example, test_session.TestSession.testFileConcurrency is fine lock-wise, but because it stresses the networking layer with several dozen client threads, there are two socket errors:

Exception in thread Thread-1098:

Traceback (most recent call last):

File "/usr/lib64/python2.7/threading.py", line 810, in __bootstrap_inner

self.run()

File "/usr/lib64/python2.7/threading.py", line 763, in run

self.__target(*self.__args, **self.__kwargs)

File "py27-sa-ossl/lib/python2.7/site-packages/cherrypy/test/test_session.py", line 282, in request

c.endheaders()

File "/usr/lib64/python2.7/httplib.py", line 969, in endheaders

self._send_output(message_body)

File "/usr/lib64/python2.7/httplib.py", line 829, in _send_output

self.send(msg)

File "/usr/lib64/python2.7/httplib.py", line 805, in send

self.sock.sendall(data)

File "/usr/lib64/python2.7/ssl.py", line 293, in sendall

v = self.send(data[count:])

File "/usr/lib64/python2.7/ssl.py", line 262, in send

v = self._sslobj.write(data)

error: [Errno 32] Broken pipe

Exception in thread Thread-1099:

Traceback (most recent call last):

File "/usr/lib64/python2.7/threading.py", line 810, in __bootstrap_inner

self.run()

File "/usr/lib64/python2.7/threading.py", line 763, in run

self.__target(*self.__args, **self.__kwargs)

File "py27-sa-ossl/lib/python2.7/site-packages/cherrypy/test/test_session.py", line 282, in request

c.endheaders()

File "/usr/lib64/python2.7/httplib.py", line 969, in endheaders

self._send_output(message_body)

File "/usr/lib64/python2.7/httplib.py", line 829, in _send_output

self.send(msg)

File "/usr/lib64/python2.7/httplib.py", line 805, in send

self.sock.sendall(data)

File "/usr/lib64/python2.7/ssl.py", line 293, in sendall

v = self.send(data[count:])

File "/usr/lib64/python2.7/ssl.py", line 262, in send

v = self._sslobj.write(data)

error: [Errno 104] Connection reset by peer

Other error details are available in the XML reports, which retain them. You may think that I have found the reason for the “carefulness” I questioned above, but I wouldn’t agree, for several reasons. First, it looks like an SSL-only issue, and it needs investigation: does anyone know practical reasons for the SSL server and client to be more fragile? Second, if a test stresses the server with a few dozen client threads, regardless of SSL, it should be written in a way that tolerates failures for some of them rather than relying on magic recovery behind the scenes. And after all, all this integration testing is quite useless if we assume we’re fine with 9/10 of the clients failing in any test.
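
What I mean by tolerating some client failures explicitly can be sketched like this. The helper names `run_clients` and `assert_mostly_successful` and the 10% threshold are hypothetical, chosen for illustration only.

```python
import threading


def run_clients(request, count):
    """Run ``request(index)`` in ``count`` threads and collect the errors."""
    errors = []
    lock = threading.Lock()

    def worker(index):
        try:
            request(index)
        except Exception as exc:
            with lock:
                errors.append((index, exc))

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return errors


def assert_mostly_successful(errors, count, max_failure_ratio=0.1):
    """Fail explicitly when more clients broke than the test tolerates."""
    ratio = len(errors) / float(count)
    if ratio > max_failure_ratio:
        raise AssertionError('{0} of {1} clients failed: {2!r}'.format(
            len(errors), count, errors[:3]))
```

The tolerance is then visible in the test itself, and every failed client is still recorded instead of being hidden by a retry.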

Status indication

Long story short, I have changed my mind on status badges. After thinking a lot about organising the project’s status indication and notification, where the most important part is regression notification when we have contributors who are used to committing without running tests, I came to the conclusion that status badges can do the job.

This way every contributor will know that an introduced regression will become public knowledge in a matter of minutes, and that it won’t make other members of the community happy. Hopefully this, as a form of enforcing standards, together with the new testing tools will help us go more smoothly.

Another thing that made me think about status indication was the March Google Code shutdown announcement [13]. For my Open Source code I was mostly using Google Code’s Mercurial. It was a stable and minimal environment without distraction. Although it wasn’t substantially maintained and over time came to lack several features, it still has several exclusive ones. Essentially it was project hosting, in contrast to Bitbucket and Github, which are repository hosting. One could have as many repositories inside a project as needed. I know it is also feasible with Mercurial named branches, but it still feels a little denser than it should be. Another handy thing was that Google served raw content with the appropriate MIME type, e.g. JavaScript with text/javascript, which allowed right-off-the-repo deployment for various testing purposes.

For status indication I used external links, the block in the left sidebar on the main project page. You could say: here is the PyPI page, here’s CI, and here’s test coverage tracking. Because it was visible, one click away and next to the project description, it was sufficient.

Drawing the line

As far as I can see, the testing infrastructure is ready. I also made several related changes here and there. Now I think it is a good time to put it upstream. First, of course, so that other contributors can make use of the new tools. Second, for the sake of granularity, because there is already a bunch of changes in my fork since January. And third, in the coming months it is likely that I won’t have much time for contribution, so it’s a good time to discuss the changes if we need to.

SSL

As part of the discussion of the design decisions made in the test suite [6], Joseph Tate, another CherryPy contributor, expressed the opinion that SSL support in CherryPy should be deprecated. Basically the message is: how can we guarantee the security of the implementation if we can’t even guarantee its functionality?

Later in the discussion, when I insisted on the fact that CherryPy’s SSL adapters are a wrapper on a wrapper (Python ssl or pyOpenSSL on top of OpenSSL), and that we don’t need a professional security expert in the community or special maintenance, Joseph relaxed his opinion:

Distros have patched OpenSSL to remove insecure stuff, so maybe this is not as big a concern as I’m making it out to be, but the lack of an expert on this code is hindering its viability.

I also want to inform Joseph that his knowledge of CherryPy’s support for intermediate certificates isn’t up to date. cherrypy.server.ssl_certificate_chain is documented [14] and has been there at least since 2011. I’ve verified that it works in the default branch.

Anyway, I took Joseph’s questions seriously, and I’m sure we should not in any way give our users a false sense of security. Security here is meant in the theoretical sense, as the state of the art. I’m talking neither about zero-day breaches in algorithms and implementations, nor about the fundamental problem of CA trust, no-such-agencies and generally the post-Snowden world we live in.

On the other hand, I believe that having a functional pure-Python HTTPS server (possibly insecure in the theoretical sense) is beneficial for many practical use cases. There are various development tools that are HTTPS-only unless you fight them. There are environments where you have Python but can’t install other software. There are use cases where any SSL, as obfuscation, is secure enough.

CherryPy is described and known as a complete web server, and such a reduction would be a big loss. And as I will show below, although there are several issues, it is possible to work around them and configure CherryPy to serve an application over HTTPS securely even today.

Experiment

Recently there was a CherryPy StackOverflow question on exactly this topic [15]. The author asked how to block the SSL protocols in favour of TLS. It led me to the following quick-and-dirty solution.

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-


    import os
    import sys
    import ssl

    import cherrypy
    from cherrypy.wsgiserver.ssl_builtin import BuiltinSSLAdapter
    from cherrypy.wsgiserver.ssl_pyopenssl import pyOpenSSLAdapter
    from cherrypy import wsgiserver

    if sys.version_info < (3, 0):
        from cherrypy.wsgiserver.wsgiserver2 import ssl_adapters
    else:
        from cherrypy.wsgiserver.wsgiserver3 import ssl_adapters

    try:
        from OpenSSL import SSL
    except ImportError:
        pass


    ciphers = (
        'ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+HIGH:'
        'DH+HIGH:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+HIGH:RSA+3DES:!aNULL:'
        '!eNULL:!MD5:!DSS:!RC4:!SSLv2'
    )

    bundle = os.path.join(os.path.dirname(cherrypy.__file__), 'test', 'test.pem')

    config = {
        'global' : {
            'server.socket_host' : '127.0.0.1',
            'server.socket_port' : 8443,
            'server.thread_pool' : 8,

            'server.ssl_module'      : 'custom-pyopenssl',
            'server.ssl_certificate' : bundle,
            'server.ssl_private_key' : bundle,
        }
    }


    class BuiltinSsl(BuiltinSSLAdapter):
        '''Vulnerable, on py2 < 2.7.9, py3 < 3.3:

          * POODLE (SSLv3), adding ``!SSLv3`` to cipher list makes it very incompatible
          * can't disable TLS compression (CRIME)
          * supports Secure Client-Initiated Renegotiation (DOS)
          * no Forward Secrecy

        Also session caching doesn't work. Some tweaks are possible, but don't really
        change much. For example, it's possible to use ssl.PROTOCOL_TLSv1 instead of
        ssl.PROTOCOL_SSLv23 with little worse compatibility.
        '''

        def wrap(self, sock):
            """Wrap and return the given socket, plus WSGI environ entries."""
            try:
                s = ssl.wrap_socket(
                    sock,
                    ciphers = ciphers, # the override is for this line
                    do_handshake_on_connect = True,
                    server_side = True,
                    certfile = self.certificate,
                    keyfile = self.private_key,
                    ssl_version = ssl.PROTOCOL_SSLv23
                )
            except ssl.SSLError:
                e = sys.exc_info()[1]
                if e.errno == ssl.SSL_ERROR_EOF:
                    # This is almost certainly due to the cherrypy engine
                    # 'pinging' the socket to assert it's connectable;
                    # the 'ping' isn't SSL.
                    return None, {}
                elif e.errno == ssl.SSL_ERROR_SSL:
                    if e.args[1].endswith('http request'):
                        # The client is speaking HTTP to an HTTPS server.
                        raise wsgiserver.NoSSLError
                    elif e.args[1].endswith('unknown protocol'):
                        # The client is speaking some non-HTTP protocol.
                        # Drop the conn.
                        return None, {}
                raise

            return s, self.get_environ(s)

    ssl_adapters['custom-ssl'] = BuiltinSsl


    class Pyopenssl(pyOpenSSLAdapter):
        '''Mostly fine, except:

          * Secure Client-Initiated Renegotiation
          * no Forward Secrecy, SSL.OP_SINGLE_DH_USE could have helped but it didn't
        '''

        def get_context(self):
            """Return an SSL.Context from self attributes."""
            c = SSL.Context(SSL.SSLv23_METHOD)

            # override:
            c.set_options(SSL.OP_NO_COMPRESSION | SSL.OP_SINGLE_DH_USE | SSL.OP_NO_SSLv2 | SSL.OP_NO_SSLv3)
            c.set_cipher_list(ciphers)

            c.use_privatekey_file(self.private_key)
            if self.certificate_chain:
                c.load_verify_locations(self.certificate_chain)
            c.use_certificate_file(self.certificate)
            return c

    ssl_adapters['custom-pyopenssl'] = Pyopenssl


    class App:

        @cherrypy.expose
        def index(self):
            return '<em>Is this secure?</em>'


    if __name__ == '__main__':
        cherrypy.quickstart(App(), '/', config)

For the experiment I regenerated our test certificate to:

- use longer than 1024-bit key

- not use MD5 and SHA1 (also deprecated [26]) for signature

- use valid domain name for common name

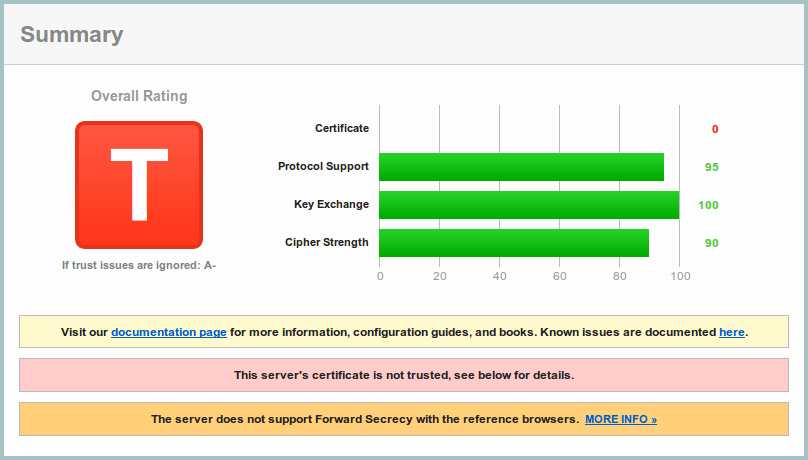

Using the following versions, we have A- for the customised pyOpenSSL adapter on Qualys SSL Server Test [34]:

- Debian Wheezy (stable)

- Python 2.7.3-4+deb7u1, ssl.OPENSSL_VERSION == 'OpenSSL 1.0.1e 11 Feb 2013'

- OpenSSL 1.0.1e-2+deb7u16

- pyOpenSSL 0.14

- CherryPy from default branch (pre 3.7)

There are no errors, only warnings:

- Secure Client-Initiated Renegotiation — Supported, DoS DANGER [16]

- Downgrade attack prevention — No, TLS_FALLBACK_SCSV not supported [17]

- Forward Secrecy — No, WEAK [18]

Here’s what Wikipedia says [25] about forward secrecy, to inform you that it is not something critical or required for immediate operation.

In cryptography, forward secrecy is a property of key-agreement protocols ensuring that a session key derived from a set of long-term keys cannot be compromised if one of the long-term keys is compromised in the future.

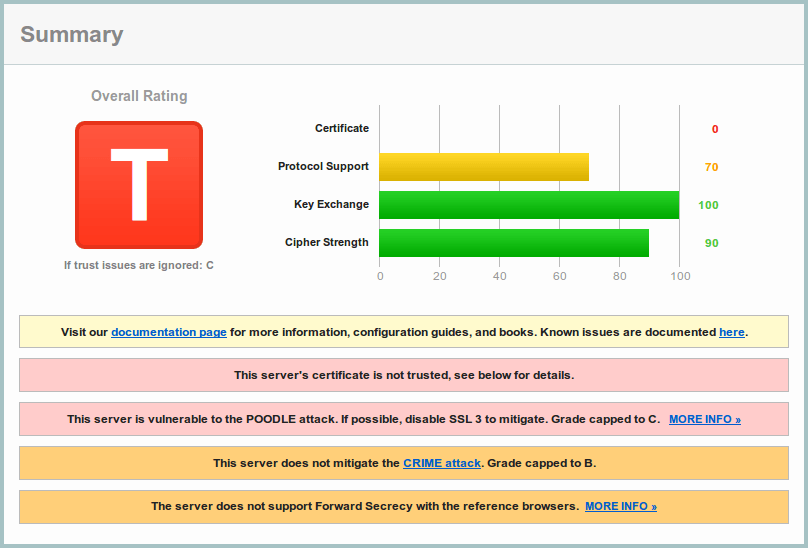

And here’s what can be squeezed out of built-in ssl, which is grievous.

Here goes the what-is-wrong list:

There’s also half-implemented session resumption: No (IDs assigned but not accepted). The same three warnings as for pyOpenSSL apply. ssl_builtin doesn’t handle the underlying ssl module’s exceptions correctly: the log was flooded by exceptions originating mostly from do_handshake(). pyOpenSSL’s log was far quieter, with just a couple of page accesses.

[27/Mar/2015:14:07:49] ENGINE Error in HTTPServer.tick

Traceback (most recent call last):

File "venv/local/lib/python2.7/site-packages/cherrypy/wsgiserver/wsgiserver2.py", line 1968, in start

self.tick()

File "venv/local/lib/python2.7/site-packages/cherrypy/wsgiserver/wsgiserver2.py", line 2035, in tick

s, ssl_env = self.ssl_adapter.wrap(s)

File "./test.py", line 53, in wrap

ssl_version = ssl.PROTOCOL_SSLv23

File "/usr/lib/python2.7/ssl.py", line 381, in wrap_socket

ciphers=ciphers)

File "/usr/lib/python2.7/ssl.py", line 143, in __init__

self.do_handshake()

File "/usr/lib/python2.7/ssl.py", line 305, in do_handshake

self._sslobj.do_handshake()

SSLError: [Errno 1] _ssl.c:504: error:1408A0C1:SSL routines:SSL3_GET_CLIENT_HELLO:no shared cipher

SSLError: [Errno 1] _ssl.c:504: error:14076102:SSL routines:SSL23_GET_CLIENT_HELLO:unsupported protocol

SSLError: [Errno 1] _ssl.c:504: error:1408F119:SSL routines:SSL3_GET_RECORD:decryption failed or bad record mac

SSLError: [Errno 1] _ssl.c:504: error:14094085:SSL routines:SSL3_READ_BYTES:ccs received early

But nothing is really that bad, even with ssl_builtin. Let’s relax the stability policy (make sure you understand the consequences if you’re going to do this on a production server), add Debian Jessie’s (testing) source to /etc/apt/sources.list of the server (Debian Wheezy) and see what the next Debian has in its briefcase:

- Python 2.7.9-1, ssl.OPENSSL_VERSION == 'OpenSSL 1.0.1k 8 Jan 2015'

- Python 3.4.2-2, ssl.OPENSSL_VERSION == 'OpenSSL 1.0.1k 8 Jan 2015'

- OpenSSL 1.0.1k-3

- pyOpenSSL 0.15.1 (installed via pip, probably has been updated since)

For your information, here is the related excerpt from the Python 2.7.9 release change log [21]. It was in fact a huge security update, not very well represented by a patch version bump.

- The entirety of Python 3.4’s ssl module has been backported for Python 2.7.9. See PEP 466 [22] for justification.

- HTTPS certificate validation using the system’s certificate store is now enabled by default. See PEP 476 [23] for details.

- SSLv3 has been disabled by default in httplib and its reverse dependencies due to the POODLE attack.

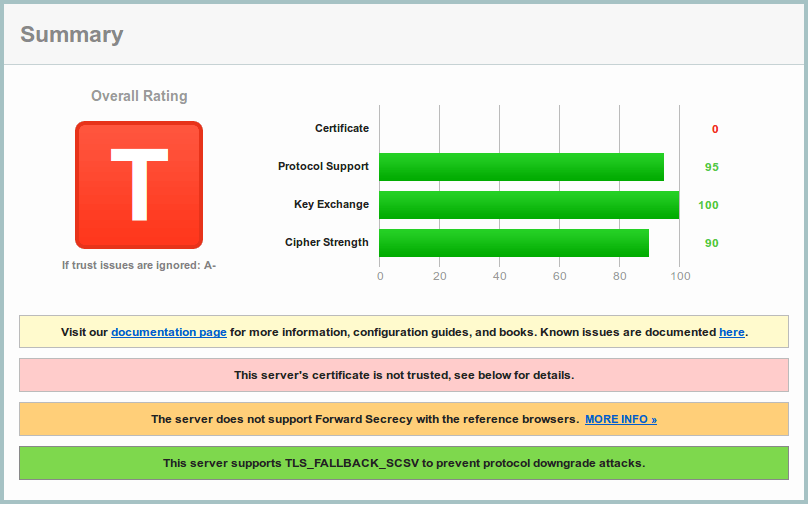

All the errors are gone, and in spite of the warning it supports forward secrecy for most clients. Just a couple of warnings are left:

- Secure Client-Initiated Renegotiation — Supported, DoS DANGER [16]

- Session resumption (caching) — No (IDs assigned but not accepted)

The result is pretty good. If you run it on plain ssl_builtin, it gets B, because RC4 ciphers [24] aren’t excluded by default. The Python 3.4.2 test on the customised ssl_builtin obviously gives the same A-, because both underlying libraries are the same. Customised ssl_pyopenssl on Python 2.7.9 gives the same A- as well: again it has functional session resumption but doesn’t have forward secrecy for any client.

Problem

After the experiment has taken place, it is time to generalise the results. Here’s what is wrong with CherryPy’s SSL support now:

- giving false sense of security

- inflexible configuration: protocol, ciphers, options

- not up to date with built-in ssl (no SSL Context [27] support)

- no pyOpenSSL support for py3

- no security assessment

Plan

I think that the problem is solvable with reasonable effort. What has been said above may already have convinced you that no special cryptography knowledge is required. There’s a scalar letter grade from A to F, and several design decisions aimed at flexibility and compatibility.

Outright state of affairs

Now we have the following note in the SSL support section [14] of the Deploy documentation page.

You may want to test your server for SSL using the services from Qualys, Inc.

After doing this research, to me it doesn’t even look like a half-truth expressed modestly. It looks like we lie to users, giving them a false sense of security. It may look like we’re so sure everything is okay that we let them do the voluntary task of checking it once again, just in case. But in fact the result is that they deploy their py2 application and see that it’s vulnerable (I assume the update to Python 2.7.9 isn’t going to happen soon, for various reasons). This is absolutely redundant, and we can tell right away that Python < 2.7.9 is an insecure platform. There should be a warning in the documentation, like “Please update to Python 2.7.9, install pyOpenSSL or start off with Python 3.4+. Otherwise it will only obfuscate your channel”.

Python < 2.7.9 should be considered an insecure platform with appropriate Python warning, see below.

Configuration

cherrypy.server in addition to ssl_certificate, ssl_certificate_chain, ssl_private_key should support:

- ssl_cipher_list available for all Python versions and both adapters. Mostly needed for Python < 2.7.9.

- ssl_context available for Python 2.7.9+ and 3.3+. pyOpenSSL is almost there. SSL Context [27] allows user to set: protocol, ciphers, options.

SSL Context [27], when not provided by the user, should be created with ssl.create_default_context, which takes care of security defaults. It is available for Python 2.7.9+ and 3.4+. For Python 3.3 the context should be configured on the CherryPy side (the function is about two dozen lines [29]).

ssl_adapters should be available at cherrypy.wsgiserver so that the user is able to set a custom adapter (see the code above). Already implemented in my fork.
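To illustrate the defaulting logic described above, here is a minimal sketch, assuming a Python where ssl.create_default_context is available. The function make_server_context and its parameter names are hypothetical (they only mirror the ssl_* options discussed here) and are not existing CherryPy API.

```python
import ssl


def make_server_context(ssl_context=None, certificate=None,
                        private_key=None, cipher_list=None):
    """Return a server-side SSL context (hypothetical helper).

    A context supplied by the user always wins; otherwise one is
    created with the standard library's security defaults.
    """
    if ssl_context is not None:
        return ssl_context

    # Purpose.CLIENT_AUTH is the purpose the standard library defines
    # for server-side sockets.
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)

    if certificate:
        # private_key may stay None when the key is stored in the
        # same file as the certificate.
        context.load_cert_chain(certificate, private_key)
    if cipher_list:
        # Raises ssl.SSLError when no cipher from the list is usable.
        context.set_ciphers(cipher_list)
    return context
```

A user who only needs a different cipher list would call make_server_context(cipher_list='ECDHE+AESGCM'), while a user who wants full control over protocol and options would build their own context and pass it as ssl_context.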

Built-in SSL adapter update

The adapter should make use of PEP 466 [22]. This way, there may be two adapters inside it: one for Python 2.7.9+ with SSL Context [27] support and one for Python < 2.7.9. The latter should be explicitly documented as vulnerable and emit a warning when used. Documentation and warning text can be taken from urllib3’s InsecurePlatformWarning [30].
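The choice between the two adapters could look roughly like the sketch below. It is only an illustration under my own assumptions: the warning class is a hypothetical local one named after urllib3’s, the warning text is mine rather than urllib3’s, and choose_adapter_kind is not an existing CherryPy function.

```python
import ssl
import warnings


class InsecurePlatformWarning(UserWarning):
    """Hypothetical warning class, named after urllib3's counterpart."""


def choose_adapter_kind(has_ssl_context=None):
    """Return 'context' or 'legacy' depending on the ssl module in use.

    has_ssl_context can be forced for testing; by default it is
    detected from the running interpreter.
    """
    if has_ssl_context is None:
        has_ssl_context = hasattr(ssl, 'SSLContext')
    if has_ssl_context:
        return 'context'

    # The legacy path still works, but the user is told about it.
    warnings.warn(
        'ssl.SSLContext is not available on this Python; protocol, '
        'ciphers and options cannot be configured securely.',
        InsecurePlatformWarning, stacklevel=2)
    return 'legacy'
```

Emitting a regular Python warning, rather than just logging, lets users turn it into an error or silence it with the standard warnings filters.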

The adapter should handle SSL exceptions according to the protocol, not flooding the log with them.

Python 3 support for pyOpenSSL adapter

The purpose of the pyOpenSSL adapter should be rethought, as Python < 2.6 is no longer supported and Python 2.6+ has the ssl module built in. Thus the purpose is not to provide a fallback. The purpose is to provide more flexibility for users to manage their own security. A Python binary that comes from a distro’s repository comes with its own version of OpenSSL. When an OpenSSL breach is discovered, it is reasonable to expect a patched OpenSSL package to be shipped first. This gives a user the ability to rebuild pyOpenSSL with the latest OpenSSL as soon as possible, which is a matter of doing:

pip install -I pyOpenSSL

Embracing this purpose, Python 3 support should be provided. Currently pyOpenSSLAdapter.makefile uses wsgiserver.CP_fileobject directly and also uses wsgiserver.ssl_pyopenssl.SSL_fileobject, which inherits from wsgiserver.CP_fileobject. wsgiserver.CP_fileobject is available only in wsgiserver.wsgiserver2. Therefore pyOpenSSLAdapter.makefile should use wsgiserver.CP_makefile in py3, just like BuiltinSSLAdapter does. Here the advice or involvement of the original authors is likely to be needed, because it’s unclear in what circumstances pyOpenSSLAdapter.makefile should return a non-SSL socket.

Automated security assessment

Debian package of OpenSSL is described as:

[When] you need it to perform certain cryptographic actions like… SSL/TLS client and server tests.

There are also things like sslyze [31], which is described as a fast and full-featured SSL scanner. I think to some extent this approach will give us an automated security test, but it’s a rough road. Basically, the quality of results will depend on our proficiency with and maintenance of the chosen tool. A much easier way is to use a service.

Luckily, in January Qualys announced the SSL Labs API beta [32]. On the dedicated page [33] there are links to the official command line client and the protocol manual. It makes sense to expect the same behaviour from the API as the normal tester [34] has. If so, it won’t work on a bare IP address or on a port other than 443.

To put this idea into practice we have some prerequisites. If there’s a real CherryPy instance running behind Nginx on cherrypy.dev at WebFaction, do we have access to it? Can we run several additional instances there? If not, can we run them somewhere else?

I think of instances for the following environments:

- py2-ssl-insecure.ssl.cherrypy.dev with Python < 2.7.9, which will probably be the stable version in distros’ repositories for quite some time

- py2-ssl-secure.ssl.cherrypy.dev with Python 2.7.9+

- py2-pyopenssl-secure.ssl.cherrypy.dev with any py2 with pyOpenSSL

- py3-ssl-secure.ssl.cherrypy.dev with Python 3.4+

- py3-pyopenssl-secure.ssl.cherrypy.dev with any py3 with pyOpenSSL once it’s available

Then we need to multiplex them to outer port 443, because they will likely be running on a single server. Because Nginx doesn’t seem to be able to pass through upstream SSL connections [35], we need to employ something else, maybe HAProxy [36] [37].

What’s left to do thereafter is to implement an SSL Labs API client and run it on some schedule, and we’re done. It is able to give us detailed and up-to-date reports on every environment, which can be exposed to users so they can make their own decisions.
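A client for this could start as small as the sketch below. Treat the specifics as assumptions to be checked against the protocol manual [33]: the base URL, the analyze endpoint with its host and all parameters, and the status and endpoints/grade fields of the response are what I expect from the API, not something verified here. The hostnames are the ones proposed in the list above.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed base URL of the SSL Labs assessment API, see the manual [33].
API_BASE = 'https://api.ssllabs.com/api/v2/'

# Hostnames proposed above, one per tested environment.
HOSTS = [
    'py2-ssl-insecure.ssl.cherrypy.dev',
    'py2-ssl-secure.ssl.cherrypy.dev',
    'py2-pyopenssl-secure.ssl.cherrypy.dev',
    'py3-ssl-secure.ssl.cherrypy.dev',
    'py3-pyopenssl-secure.ssl.cherrypy.dev',
]


def analyze_url(host, base=API_BASE):
    """Build the URL that starts or polls an assessment of host."""
    return base + 'analyze?' + urlencode({'host': host, 'all': 'done'})


def grades(report):
    """Return the endpoint grades of a decoded report.

    Returns None while the assessment is not finished yet.
    """
    if report.get('status') != 'READY':
        return None
    return [endpoint.get('grade') for endpoint in report.get('endpoints', [])]


def fetch_report(host):
    """Fetch and decode the current report; performs a network request."""
    with urlopen(analyze_url(host)) as response:
        return json.loads(response.read().decode('utf-8'))
```

A scheduled job would call fetch_report for every entry of HOSTS, poll until grades returns something other than None, and publish the letter grades next to the environment names.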

Docker

People are running in circles, screaming “DevOps”. Besides over half of them also yell “Docker” at odd turns.

Scene of the day: Baron “Xaprb” Schwartz authored an article about the DevOps identity crisis [38], which makes it all feel this disordered. Or, from the other end, in his LinuxCon 2014 talk Solomon Hykes, founder and now CTO of Docker, Inc., presented [39]:

I know 2 things about Docker: it uses Linux containers, and the Internet won’t shut up about it.

And all you want at the end of the day is for all these people to really shut up and stop touting their magic medicine to you. But since Sylvain started a dedicated topic for collecting feedback about CherryPy’s official Docker image [40], and since I was the one who unintentionally started this discussion (we talked about the Drone.io test environment, which is a Docker container) and wanted to reply anyway, I started investigating what the thing that makes not only all the cool kids itch is really about, apart from eliminating ops as a class and curing indigestion along the way.

I have to admit that as soon as I got to the original source of information, listened to a couple of Solomon’s interviews and talks, and saw how he was involved in Docker reputation management by replying to various critical articles, I became more well-disposed toward the project. Solomon himself looks like a positive and nice kind of guy who knows what he is doing. Not as nice, actually, as if he hadn’t rewritten DotCloud’s Python code in Google’s next attempt at language engineering, though reasonably nice anyway. You may often see him saying something like this [41]:

I know that Docker is pretty hyped right now — I personally think it’s a mixed blessing for exactly this reason: unexperienced people are bound to talk about Docker, and say stupid things about what Docker can do and how to use it. I wish I could magically prevent this.

So I ought to limit myself in saying stupid things ;-)

Nature

Basically Docker is like Vagrant + OpenVZ on a vanilla kernel. The two have been available for quite some time and have established use cases: a reproducible development environment and lightweight, operating-system-level virtualisation [42], respectively. Although Docker combines the two qualities, and it’s tempting to (re)think that developers can build production-ready container images and deploy them through Docker’s magic powers, and that this is what Docker is designed for, it would be a mistake to say so.

As Solomon says, Docker is merely a building block that came in at the right time and provides high-level APIs for a set of great low-level features the modern Linux kernel has. There are several things I want to summarise from his latest interview, given to FLOSS Weekly in episode 330 [43]:

- it is not a silver bullet, it is a building block

- it is used both ways: as VM and as “binary” (process-per-container); neither is the “true” one

- it is neither superior nor mutually exclusive with configuration management

- it is not a complete production solution; production is hard and is left to the reader

- it doesn’t primarily target homogenous applications; the platform’s tools may be sufficient

I would agree that, except for the first one, one might find a “however” for any of them. Especially considering the pace of development and the promising trio of new experimental tools that came along with Docker for cluster orchestration, in the Unix way of loosely coupled components and responsibility separation: Machine, Swarm and Compose [44].

And I really like to think about Docker as a building block. No matter what the hype is, even though it entails an imbalance in the available information, there are certain design decisions made that have certain implications. Taking them into account, you decide whether Docker fits your particular task. For instance, there was a good comparison, LXC versus Docker, by Flockport [45], which emphasises the difference between the two now that Docker goes with its own libcontainer:

- performance penalty for a layered filesystem (e.g. AUFS)

- separate persistence management for immutable containers

- process-per-container design has issues with real world [46]:

  - most of existing software is written in expectation of init system

  - monitoring, cron jobs, system logging need a dedicated daemon and/or SSH daemon

  - system built of subsystems built of components is much harder to manage

As I said above, process-per-container and application-per-container both have merit. But because Docker was originally conceived with the former idea, most images in Docker Hub are process-per-container, and people begin to fight the tool they have chosen by using Bash scripts, Monit or Supervisor to manage multiple processes.

Complete configuration management products like Ansible, or even simpler Fabric-based approaches, in conjunction with the abundance of APIs for every single IaaS and PaaS provider, made infrastructure as code available with any desired quality long before Docker appeared. Big companies were implementing their own infrastructure-as-code solutions on top of their chosen service provider. Smaller companies mostly weren’t in the game. Docker, because of its momentum, can standardise service providers’ supply and popularise infrastructure as code in the industry, which I see as quite advantageous.

Another thing that shouldn’t fall out of your sight is that Docker is a new technology. It has a known and significant risk of new security issues [47]. And even in its own realm it is not nearly perfect. Indeed, Docker makes responsibility separation between devs and ops clearer. But there is cross-boundary configuration, like maximum open file handles or system networking settings, which needs to be set on the host and have corresponding configuration in the container, e.g. the MySQL table cache. There is environment-specific configuration, like load testing in QA and rate-limiting in production. There are a lot more such things to consider in a real system.

There is a lot of discussion of microservice architecture [48] today. One of its aspects is that what was inner-application complexity is now shifted into infrastructure. And the more you follow this route the more you immerse into distributed computing while distributed computing is still very hard. Docker guidelines go clearly along the route.

Lately I’ve seen a comprehensive and all-around excellent presentation on distributed computing by Jonas Bonér [49]. Although it’s named “The Road to Akka Cluster and Beyond”, the part about Akka, which is a distributed application toolkit for the JVM, starts around 3/4 of the way through the slides. The rest is an overview, theoretical aspects and practical challenges of distributed computing.

The last thing to say in this section is about Docker’s competitors. There are obviously other fish in the sea. Besides the already mentioned LXC [50], there are also newer container tools built on experience with existing tools, realising their shortcomings and addressing them. To name a few: Rocket [51], Vagga [52].

Application

With that being said, I think a thorough discussion of infrastructure and distributed application design within the scope of the CherryPy project is not only off-topic. It’s hugely out of context. The topic itself is relevant and interesting, but I’m sure everyone who is dealing or is willing to deal with an application at scale knows how to run pip install cherrypy.

What I already told Sylvain is on-topic, and what CherryPy QA can benefit from, is a Docker QA image with the complete CherryPy test suite, containing all environments and dependencies, so every contributor can take it and run the test suite against introduced changes effortlessly. Here’s a dockerfile for the purpose.

FROM ubuntu:trusty
MAINTAINER CherryPy QA Team

ENV DEBIAN_FRONTEND=noninteractive

RUN apt-get update
RUN apt-get --no-install-recommends -qy install python python-dev python3 python3-dev \
    python-pip python-virtualenv
RUN apt-get --no-install-recommends -qy install software-properties-common build-essential \
    libffi-dev mercurial ca-certificates memcached
RUN add-apt-repository ppa:fkrull/deadsnakes
RUN apt-get update
RUN apt-get --no-install-recommends -qy install python2.6 python2.6-dev python3.3 python3.3-dev

RUN pip install "tox < 2"
RUN pip install "detox < 0.10"

WORKDIR /root
RUN hg clone --branch default https://bitbucket.org/saaj/cherrypy

WORKDIR /root/cherrypy
RUN echo '#!/bin/bash\n \
service memcached start; hg pull --branch default --update; tox "$@"' > serial.sh
RUN echo '#!/bin/bash\n \
service memcached start; hg pull --branch default --update; detox "$@"' > parallel.sh
RUN chmod u+x serial.sh parallel.sh

ENTRYPOINT ["./serial.sh"]

The hg clone line should be changed to point to the source of changes. Thus I think we should distribute it just as a dockerfile. Because of development dependencies it isn’t small: ~620 MB. I also made a parallel Detox run possible, parallel.sh, but I think that running one environment at a time is usually better, to stress tests with more concurrency. What’s really nice about it is that it can be run just like a binary.

docker run -it cherrypy/qa -e "py{26-co-ssl,27-nt,33-qa,34-nt-ssl}" \
    -- -v cherrypy.test.test_http cherrypy.test.test_conn.TestLimitedRequestQueue
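To make it clearer which environments such a command selects, here is a small sketch that expands the brace expression into separate environment names. It only mimics the expansion, assuming it works the way generative environment lists do in tox; expand_envs is a hypothetical helper and not tox’s own code, and it does not split on commas outside braces.

```python
import re


def expand_envs(expression):
    """Expand brace groups in an environment expression into names."""
    match = re.search(r'\{([^{}]*)\}', expression)
    if match is None:
        # Nothing left to expand.
        return [expression]

    head, tail = expression[:match.start()], expression[match.end():]
    result = []
    for option in match.group(1).split(','):
        # Recurse so that several brace groups form a product.
        result.extend(expand_envs(head + option.strip() + tail))
    return result


print(expand_envs('py{26-co-ssl,27-nt,33-qa,34-nt-ssl}'))
# ['py26-co-ssl', 'py27-nt', 'py33-qa', 'py34-nt-ssl']
```

So the command above runs four environments, one after another, each restricted to the two listed test modules.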

Side-project

As I said, I think that certain answers to questions of infrastructure, orchestration or application design are off-topic inside the project. CherryPy was never meant to answer them. But it’s really nice to have all this information available nearby. Like the series of posts Sylvain wrote in his blog [53].

Sylvain, I can give you a starting point if you wish. I have a CherryPy project, cherrypy-webapp-skeleton [54], which is a complete, traditional CherryPy deployment on Debian. I had a fabfile [55] there which I used to test the tutorial against a fresh Debian virtual machine. What I did is basically translate it into a dockerfile [56]. It’s a virtual-machine-style, application-per-container thing, and it’s good for testing. It is also one of the possible ways of deployment, but it obviously doesn’t provide a means of scaling. So if you’re interested in splitting it into separate containers and providing configuration for containers and orchestration for such an example, I would surely accept your changes. You’re also free to point out anything you see that is wrong there; it’s all open to discussion.

In case you’re interested I’ll give an overview of the project and at the same time address some of your questions:

- components as-is: CherryPy, Nginx, MySQL, Monit

- containers to-be: 2 x CherryPy, Nginx, MySQL

- it runs through init script which calls cherryd-like daemon

- it is installed into a virtualenv but it probably doesn’t make sense in a container

- we ought to adhere to the distro’s standard directory layout (/var/www, /var/log, etc.), but the extent is in question

If we present it as an example, we must also address, in some way, the init system and other issues [46] I wrote about above.

Questions

For these I can expect answers only from Sylvain, but others’ ideas are also welcome.

- Will you review my changes in the fork [5]? Or is it easier if I create a pull request right away?

- Can you update documentation to explicitly express the state of our SSL support? I think it is needed as soon as possible.

- Do we have any progress on the bug tracker?

- As you have already mentioned a CherryPy roadmap, I think, it’s getting a direction, like: 3.7 for QA, 3.8 for SSL.

- I still want to hear about your ideas about test_gc.

- We still have this outdated and pointless wiki home as the landing page for the repo.

- The recent coverage report [8] uncovered that we have a dozen zero-coverage modules which are probably obsolete.

| [1] | https://wiki.python.org/moin/GlobalInterpreterLock |

| [2] | http://www.dabeaz.com/python/NewGIL.pdf |

| [3] | http://en.wikipedia.org/wiki/Integration_testing |

| [4] | http://en.wikipedia.org/wiki/System_testing |

| [5] | https://bitbucket.org/saaj/cherrypy |

| [6] | https://groups.google.com/forum/#!topic/cherrypy-users/Qm-uLscS4z4 |

| [7] | https://drone.io/bitbucket.org/saaj/cherrypy |

| [8] | https://codecov.io/bitbucket/saaj/cherrypy?ref=default |

| [9] | http://tox.rtfd.org/en/latest/example/pytest.html#known-issues-and-limitations |

| [10] | https://launchpad.net/~fkrull/+archive/ubuntu/deadsnakes |

| [11] | http://tox.readthedocs.org/en/latest/config-v2.html |

| [12] | https://bitbucket.org/saaj/cherrypy/src/default/cherrypy/test/xmltestreport.py |

| [13] | http://google-opensource.blogspot.com/2015/03/farewell-to-google-code.html |

| [14] | http://cherrypy.readthedocs.org/en/latest/deploy.html#ssl-support |

| [15] | http://stackoverflow.com/q/29260947/2072035 |

| [16] | https://community.qualys.com/blogs/securitylabs/2011/10/31/tls-renegotiation-and-denial-of-service-attacks |

| [17] | https://datatracker.ietf.org/doc/draft-ietf-tls-downgrade-scsv/ |

| [18] | https://community.qualys.com/blogs/securitylabs/2013/06/25/ssl-labs-deploying-forward-secrecy |

| [19] | https://community.qualys.com/blogs/securitylabs/2014/10/15/ssl-3-is-dead-killed-by-the-poodle-attack |

| [20] | https://community.qualys.com/blogs/securitylabs/2012/09/14/crime-information-leakage-attack-against-ssltls |

| [21] | https://www.python.org/downloads/release/python-279/ |

| [22] | https://www.python.org/dev/peps/pep-0466/ |

| [23] | https://www.python.org/dev/peps/pep-0476/ |

| [24] | https://community.qualys.com/blogs/securitylabs/2013/03/19/rc4-in-tls-is-broken-now-what |

| [25] | http://en.wikipedia.org/wiki/Forward_secrecy |

| [26] | https://community.qualys.com/blogs/securitylabs/2014/09/09/sha1-deprecation-what-you-need-to-know |

| [27] | https://docs.python.org/2/library/ssl.html#ssl-contexts |

| [28] | https://docs.python.org/2/library/ssl.html#ssl.create_default_context |

| [29] | https://hg.python.org/cpython/file/5b5a22b9327b/Lib/ssl.py#l395 |

| [30] | https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning |

| [31] | https://github.com/nabla-c0d3/sslyze |

| [32] | https://community.qualys.com/blogs/securitylabs/2015/01/22/ssl-labs-apis-now-available-in-beta |

| [33] | https://www.ssllabs.com/projects/ssllabs-apis/ |

| [34] | https://www.ssllabs.com/ssltest/ |

| [35] | http://forum.nginx.org/read.php?2,234641,234641 |

| [36] | https://serversforhackers.com/using-ssl-certificates-with-haproxy |

| [37] | https://scriptthe.net/2015/02/08/pass-through-ssl-with-haproxy/ |

| [38] | http://www.xaprb.com/blog/2015/02/07/devops-identity-crisis/ |

| [39] | http://www.youtube.com/watch?v=UP6HxoC66nw |

| [40] | https://groups.google.com/forum/#!topic/cherrypy-users/WywUwX8dGOM |

| [41] | http://www.krisbuytaert.be/blog/docker-vs-reality-0-1#comment-5449 |

| [42] | http://en.wikipedia.org/wiki/Operating-system-level_virtualization |

| [43] | http://twit.tv/show/floss-weekly/330 |

| [44] | http://blog.docker.com/2015/02/orchestrating-docker-with-machine-swarm-and-compose/ |

| [45] | http://www.flockport.com/lxc-vs-docker/ |

| [46] | http://phusion.github.io/baseimage-docker/ |

| [47] | https://zeltser.com/security-risks-and-benefits-of-docker-application/ |

| [48] | http://martinfowler.com/articles/microservices.html |

| [49] | http://www.slideshare.net/jboner/the-road-to-akka-cluster-and-beyond |

| [50] | https://linuxcontainers.org/ |

| [51] | https://coreos.com/blog/rocket/ |

| [52] | http://vagga.readthedocs.org/en/latest/what_is_vagga.html |

| [53] | http://www.defuze.org/ |

| [54] | https://bitbucket.org/saaj/cherrypy-webapp-skeleton |

| [55] | https://bitbucket.org/saaj/cherrypy-webapp-skeleton/src/backend/fabfile.py |

| [56] | https://bitbucket.org/saaj/cherrypy-webapp-skeleton/src/system/docker/Dockerfile |